How do you know you’re talking to Google when you navigate to www.google.com with your browser?

It might be better to answer a different question: why would you be talking to anyone other than Google when you navigate to www.google.com with your browser? After all, I summoned them using the appropriate URL; who else could possibly respond?

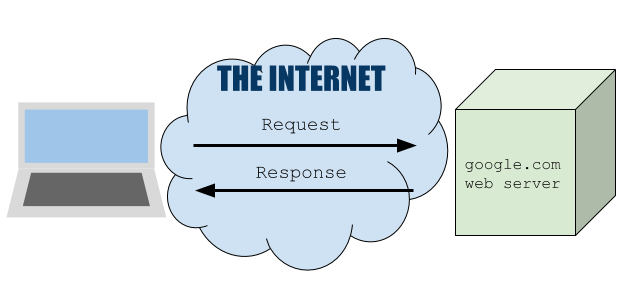

Each website assigns some computer out there in the world to answer incoming requests for its content. Viewing a website on your device entails successfully sending a request to that specific Internet-connected web server (i.e., the assigned computer) and then receiving the server’s response. Requests arrive at the server, where they are processed and answered in the form of a web page. It’s like a company that sells goods by mail order: orders physically arrive at the company’s mailbox, the company processes them, and it sends each customer the goods they asked for.

At a high level you can think of the journey taken by your request for google.com like this:

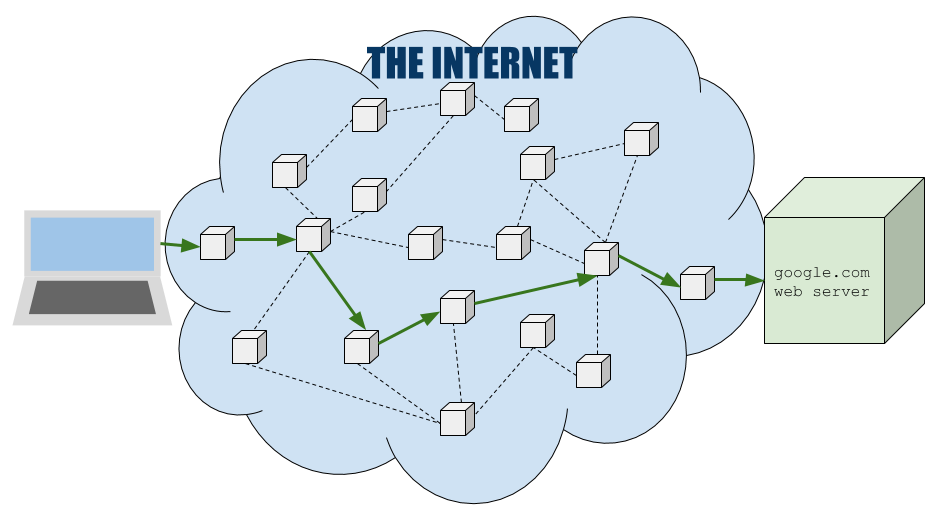

But on a slightly lower level your request actually looks like this:

Your request for a website is not sent directly to Google; it is passed along by multiple physical network devices that make up the Internet. Every request you make for a website takes a similar multi-hop journey to reach its assigned web server, so multiple intermediate devices lie between you and the website you want to access. Without any security measures, a bad actor in control of some node or connection along the route may be able to read and store your communications, alter your request, or pretend to be Google. It’s like the game Telephone, where a phrase is whispered along a line of people. Anyone in the line can ignore what they heard and pass along their own message instead, and the final player has no way to detect the change. So you might not be talking to Google because the Internet is a large network of interconnected computer hardware, and your communication with Google is handled by a series of intermediaries.

Most of us presume we are low-value targets and that the risk of interference in our web activity is consequently small enough to ignore, like a person living in a fifth-floor apartment declining to pay for flood insurance. For now, bracket the likelihood of such interference and the possible motivations of some theoretical bad actor. We are all heavy Internet users, and our activity touches important and sensitive aspects of our lives: our banking, our health, our private conversations, our photos. Instead of a request for Google’s homepage, the data you send through all those intermediaries may be your bank password or a nude photograph. The Internet is not magic, and it behooves us as users to understand its basic structure and attendant risks.

I have hopefully demonstrated a reasonable need to verify that the information we receive is indeed from Google. I want to feel more confident that Google is the author of the messages coming into my device after I navigate to google.com. We actually encounter a similar scenario in our everyday lives, namely logging into our personal account on an app or website. When you log into an account, you present your identity in the form of an email address or username along with your password. You are providing evidence to the service that you are who you claim to be, because you know some secret information known only to you and the service: your password. You are increasing the service’s confidence that the user they are communicating with is actually the account owner. We want the inverse of this pattern; we are interested in Google providing evidence that they are who they say they are, and not some attacker between my device and their web server.

Perhaps we can try reversing the normal password relationship we have with online services and make Google set up a password with you. Only you and Google would know the password. Google can send the password alongside their responses for your browser to verify, and the browser can display big red alarms if the password is wrong. This is a decent idea but it has a serious flaw: how do you establish the password with Google? If the password is how you verify that incoming messages are from Google, how can you trust that the original password setup process was performed with Google and not some bad actor?

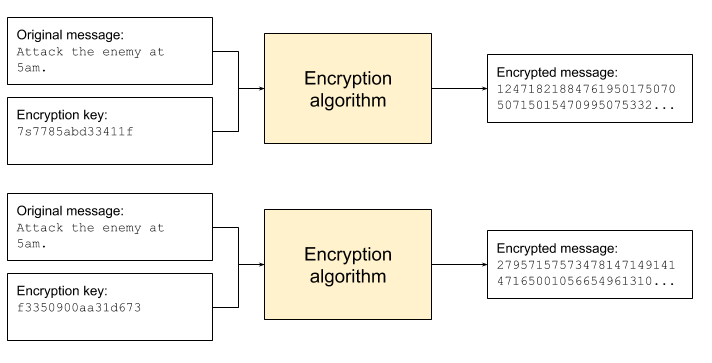

This problem is mostly solved by encryption. Encryption entails obfuscating a message in order to restrict access to its contents to authorized recipients. An encrypted message looks nothing like the original; it looks like a random series of numbers and letters. Encryption relies on pieces of information called keys. An encryption key is used as an input to an algorithm that encrypts a message in a way that is specific to the key. That is, the same original message encrypted by the same algorithm but with different keys will yield completely different results.

An encrypted message is useless if the original can never be recovered! Reversing the process and revealing the original message is called decryption. Correctly decrypting an encrypted message requires knowing the decryption key. Some types of encryption use the same key for encryption and decryption, and other types use distinct keys. If you send an encrypted message, you can be confident that only people possessing the decryption key can read it; maintaining privacy entails restricting possession of the decryption key. Attempting to decrypt a message using an incorrect decryption key will yield something other than the original message. Possessing the decryption key is tantamount to being able to read the message. If someone other than your intended recipient learns the decryption key and gets hold of your encrypted message, they can read your original message. A weakness of this scheme is that a bad actor in possession of your encrypted message can attempt a “brute-force attack”: simply trying to decrypt it with every possible key. In practice, a well-configured encryption implementation has so many possible key values that this approach is infeasible, because trying them all with any realistic amount of computing power would take far longer than a human lifetime.
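To get a feel for why the number of possible keys matters, here is a minimal Python sketch. The `toy_encrypt` function is a hypothetical cipher invented for illustration: it XORs the message with a SHA-256 hash of a 16-bit key, so there are only 65,536 possible keys and trying them all takes a fraction of a second. The sketch assumes the attacker can recognize a correct decryption, here by a known prefix. The second half does the arithmetic for a 128-bit key, assuming an illustrative (not measured) rate of one trillion guesses per second.

```python
import hashlib

def toy_encrypt(key: int, data: bytes) -> bytes:
    """Hypothetical weak cipher: XOR data (at most 32 bytes) with SHA-256(key).

    XOR is its own inverse, so the same function also decrypts.
    """
    pad = hashlib.sha256(key.to_bytes(2, "big")).digest()  # 16-bit key -> 32-byte pad
    return bytes(a ^ b for a, b in zip(data, pad))

def brute_force(ciphertext: bytes, known_prefix: bytes):
    """Try every 16-bit key until the output starts with the expected prefix."""
    for guess in range(2**16):
        candidate = toy_encrypt(guess, ciphertext)
        if candidate.startswith(known_prefix):
            return guess, candidate
    return None

secret_key = 48_213
ciphertext = toy_encrypt(secret_key, b"PIN: 7391")
print(brute_force(ciphertext, b"PIN: "))  # finds a key that yields b'PIN: 7391'

# The same exhaustive search against a 128-bit key space.
keys_128 = 2**128
guesses_per_second = 10**12           # assumed: one trillion guesses per second
seconds_per_year = 60 * 60 * 24 * 365
years = keys_128 / guesses_per_second / seconds_per_year
print(f"{years:.1e} years")           # 1.1e+19 years
```

Each extra key bit doubles the search, which is why the 16-bit toy falls instantly while the 128-bit case would outlast the universe many times over.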

To help us along in seeing how encryption can help confirm you are talking to Google, consider a simpler scenario. You and your friend Alice decide to set up a secure communication channel so that nobody can snoop on your conversations. You decide to use encryption so that only you and Alice can read each other’s messages even if the encrypted messages are intercepted. Your chosen encryption scheme uses a single key for both encryption and decryption; a message is encrypted using the key, sent to the other person, and is then decrypted with the same key. The security of the entire endeavor hinges upon the key only being known to you and Alice. You generate the key at home on your computer and must make a decision: how should you send the key to Alice? The key must remain a secret, otherwise the security of the channel is compromised. Sending the key over the Internet seems risky (you are a rather paranoid pair setting up a personal encrypted channel, after all), as does reading it aloud over the phone. Passing a written copy of the key to Alice in person seems pretty safe. But as you think further, you wonder if malware on your computer spied on the key generation and compromised the channel at the outset. What should you do?
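The single-key arrangement with Alice can be sketched in Python. This is a toy stream cipher invented for illustration, not a real protocol: the hypothetical `keystream` function derives pseudorandom bytes by hashing the shared key with a counter, and `xor_cipher` XORs them with the message. It omits the nonce and authentication that real ciphers such as AES-GCM or ChaCha20-Poly1305 rely on, so it should never protect real data. It does show the two properties described above: different keys scramble the same message differently, and only the same key undoes the scrambling.

```python
import hashlib
import itertools

def keystream(key: bytes, length: int) -> bytes:
    """Derive `length` pseudorandom bytes by hashing key || counter."""
    out = bytearray()
    for counter in itertools.count():
        if len(out) >= length:
            break
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
    return bytes(out[:length])

def xor_cipher(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XORing with the same keystream twice restores the data."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

shared_key = b"written on paper, handed over in person"
message = b"meet me at noon"

ciphertext = xor_cipher(shared_key, message)          # you encrypt and send
other = xor_cipher(b"some other key", message)        # a different key, different output
print(ciphertext.hex())
print(other.hex())
print(xor_cipher(shared_key, ciphertext))             # Alice decrypts: b'meet me at noon'
print(xor_cipher(b"a wrong guess", ciphertext))       # not the original message
```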

This dilemma brings us to an important point: security is a continuum. No system is either secure or insecure, but is rather secure to some extent. In the example with you and Alice, your options for sending the key are not equally secure. If you pass her the key in person, you can be more confident in the channel’s security than if you had sent it over the Internet or told a third party to share it with Alice. Sharing information via any mechanism carries a risk that it will be overheard, and establishing an encryption key is no different. This applies to our earlier idea for Google to establish a password with you, which is essentially the same problem as you and Alice sharing the encryption key. But it is even more difficult because you and Google do not have the option of communicating in person.

A specific flavor of encryption called asymmetric encryption will help us circumvent the key sharing dilemma. Asymmetric encryption uses two distinct keys called a public key and a private key. The two keys in a pair are generated together and are mathematically related. A message encrypted with the public key can only be decrypted using its matching private key. How can we apply this to the situation with you and Alice? You can each generate your own key pair and give each other your respective public keys. To send a secure message to Alice, encrypt it with her public key and send it to her, knowing that only she can decrypt the message because only she knows her private key. Asymmetric encryption solves the key sharing problem because public keys are intended to be shared. It doesn’t matter if the transmission of your public key to Alice is overheard, because a message that Alice encrypts with it cannot be decrypted without your private key.
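Textbook RSA is one well-known asymmetric scheme, and a minimal Python sketch of it shows the public/private split. This uses the classic tiny primes 61 and 53 and no padding, so it is trivially breakable and purely illustrative; real deployments use keys of 2048 bits or more together with a padding scheme such as OAEP. The modular inverse via `pow(e, -1, phi)` requires Python 3.8 or later.

```python
# Textbook RSA with tiny primes: for illustration only, trivially breakable.
p, q = 61, 53
n = p * q                   # 3233, the modulus shared by both keys
phi = (p - 1) * (q - 1)     # 3120, computable only if you know p and q
e = 17                      # public exponent
d = pow(e, -1, phi)         # private exponent, the modular inverse of e

public_key = (n, e)         # safe to hand to anyone
private_key = (n, d)        # kept secret by its owner

def encrypt(public_key, m: int) -> int:
    n, e = public_key
    return pow(m, e, n)

def decrypt(private_key, c: int) -> int:
    n, d = private_key
    return pow(c, d, n)

m = 65                      # the message, encoded as an integer smaller than n
c = encrypt(public_key, m)
print(d)                    # 2753
print(c)                    # 2790
print(decrypt(private_key, c))  # 65
```

Anyone holding `public_key` can produce `c`, but recovering `m` requires `d`, which in turn requires knowing the factors of `n`. With real key sizes, factoring `n` is believed to be infeasible.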

Let’s pause to consider the secure channel we just set up with Alice using asymmetric encryption.

- Nobody but the intended recipient can read an encrypted message because only the recipient knows their private key.

- We cannot prove that a message was sent by Alice. If Bob knows your public key, he can send you an encrypted message and pretend to be Alice.

- The channel can be scaled up if we make new security-minded friends. New members simply generate their own key pair and share their public key with the rest of the members. A person’s public key can be shared with as many people as needed.

This scheme seems adequate for you and Alice, but it creaks under the weight of more people. For one, the authorship problem in the second bullet above gets even worse. If there are ten thousand people in your secure channel, how can you tell who is sending you messages? Key sharing with more people is also problematic. The channel relies upon establishing the association of a public key with an identity. You and Alice felt confident in the key-identity establishment because you did it face-to-face. With many more participants, sharing keys in person becomes infeasible. Using other communication channels for key sharing reduces security because you cannot be as confident in the authenticity of the person sharing their public key. You may think you are getting Bob’s public key when in fact you are being tricked by Eve into receiving hers; messages you are sending “to Bob” are actually encrypted with Eve’s public key, and now she can read them. Not all is lost, because again: security is a continuum. A single compromised key-identity establishment only endangers messages sent to that identity; it does not ruin the entire network.

Let us return to our search for confidence that we are actually talking to Google. Google communicates with billions of users, so we need an encryption scheme that scales without breaking down. Asymmetric encryption will still be useful to us, but there are some unsolved problems with our setup that need fixing before we can feel confident we are talking to Google. The one-to-many relationship between asymmetric encryption’s hidden private key and shareable public key lends itself well to the Google-to-billions reality. Indeed, Google has a private key, and the solution we land on for knowing you are talking to Google will use it. But first we need a mechanism for proving authorship of messages and a way of securely establishing the mapping of a public key to an identity.

A key pair is not really associated with a specific person or entity, but in practice they are used as a proxy for identity; demonstrated ownership of the private key is considered proof that the sender of a message is the private key’s owner, and “the private key’s owner” is assumed to be a specific person or organization like Google. If you are confident that a message was written using a certain private key, and that you know the matching public key, and that you know the identity of the public key’s owner, then you can feel confident the message was written by that public key’s owner.

We need a way to transmute private key possession into evidence of authorship. Enter digital signatures like a deus ex machina. A digital signature is a piece of information included alongside a message to prove its authorship and integrity (i.e., that the message was not tampered with). It is generated by an algorithm whose inputs are the message and a private key. The same message will yield distinct signatures if signed with two different private keys, and two different messages will yield distinct signatures if signed with the same private key. A second algorithm takes the message, the signature, and a public key, and checks whether the signature was generated with the matching private key. Since the message is an input to the signing algorithm, the verification step will also detect message tampering. As with breaking encryption, forging a digital signature without the private key is computationally infeasible. A valid signature indicates that the message was sent by a holder of the private key and that the message was not altered.
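One simple way to realize the sign/verify pair is textbook RSA signing: hash the message, then raise the hash to the private exponent. The Python sketch below assumes a toy key built from two well-known Mersenne primes (2^61 − 1 and 2^89 − 1), which are public knowledge, so the key offers no security at all. Real systems use padded schemes such as RSA-PSS, or other algorithms such as ECDSA or Ed25519. The function names `digest`, `sign`, and `verify` are illustrative, not any library’s API.

```python
import hashlib

# Toy RSA signing key from two known Mersenne primes. Illustration only.
p = 2**61 - 1
q = 2**89 - 1
n = p * q
e = 65537                          # public exponent
d = pow(e, -1, (p - 1) * (q - 1))  # private exponent (Python 3.8+)

def digest(message: bytes) -> int:
    """Hash the message and reduce it to an integer below n."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(message: bytes) -> int:
    """Only the holder of the private exponent d can compute this value."""
    return pow(digest(message), d, n)

def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public key (n, e) can check the signature."""
    return pow(signature, e, n) == digest(message)

msg = b"This response really came from google.com"
tampered = b"This response really came from g00gle.com"
sig = sign(msg)

print(verify(msg, sig))       # True: signed with the matching private key
print(verify(tampered, sig))  # False: any change to the message breaks the check
```

Because the hash of the message feeds into the signature, altering even one character changes the value `verify` compares against, which is how tampering is detected.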

We can even encrypt the messages before we generate the signature to keep messages private! This solves a major problem in communicating with Google. If you know Google’s public key, Google can sign every message they send to you and you will know they authored the messages. If we get Google’s public key, everything will fall into place.

One remaining dragon of a problem looms before us: we have to get Google’s public key over the Internet, but getting it over the Internet is insecure. With Alice, the safe mechanism we chose for establishing her public key was receiving it in person. Alice told us her public key in person, and we trust her, so we accepted what she told us. Of course, she could lie, or “Alice” could have been a robot designed to look like Alice. We cannot mathematically prove that a public key is Alice’s. She can give us Bob’s key, and now all the messages we send to “Alice” are readable by Bob. But we trust Alice, and trust is not about math. Trust is about confidence. When you drop a letter in the mailbox, you trust that the postal service will deliver it. The delivery is not at all guaranteed, but you have reasons to feel confident that it will happen: it’s some people’s jobs to deliver it, people generally perform their work duties, the postal service is not bankrupt, etc. The reliance on trust and lack of math in the key sharing step did not ruin your secure channel with Alice, but it did introduce a chink in its armor. It ever so slightly reduces the channel’s security; it is still useful, but we need to be clear-eyed about it. We achieved certainty with math and algorithms wherever possible, but information sharing is inherently risky because people can be dishonest, eavesdroppers can listen in, and bad actors can interfere during transmission. We must accept this inherent risk when using the Internet.

Maybe Google could leverage face-to-face trust to give us their public key. Google could set up physical public key distribution centers where you go in person to receive the key printed on a business card, and you would feel pretty confident that the storefront with a giant Google logo on the facade is not a fake, because Google is loaded and probably has teams of whip-smart lawyers being fed near-live photographic evidence of all storefronts via their literal fleet of camera-equipped Google Maps Street View cars, so a person would have to be crazy to try to set up a fake distribution center. Or maybe Google could tell their key to some seed group of really honest and trustworthy people, ensuring they are total social butterflies who can share the public key with loads of people, and Google can rest easy knowing it will flow through the population like hot gossip, and you could just ask the people you trust for the key and assume they are not lying.

Of course Google does neither of those things, but the solution for google.com and all other websites does rely on trust. Basically, Google makes you phone a friend.

Seriously. Your web browser came installed with a list of organizations called certificate authorities (CAs) and their public keys. Safari, Chrome, Firefox, whatever: each comes with (or relies on the operating system for) a list of public keys owned by CAs that its maker trusts. When you go to google.com, the response will include a public key certificate which says, “I am google.com, my public key is 1234, and certificate authority X can vouch for that”. The certificate carries a digital signature generated with the listed CA’s private key; the certificate’s contents are treated like any old message to be signed. If your browser came packaged with the listed authority’s public key, then it can verify that the CA signed google.com’s certificate by using the signature verification step discussed previously for proving message authorship and integrity. If the presented certificate was signed by some CA that your browser does not trust, your browser will throw up scary warning messages because it has no trusted public key with which to verify the certificate’s signature.
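The browser’s side of this check can be sketched in Python under heavy simplifying assumptions. Real certificates use the X.509 format and are usually signed through a chain of intermediate CAs; here a certificate is just a JSON-encoded dictionary, “Example CA” and its toy RSA key (built from public Mersenne primes, so insecure) are hypothetical, and `TRUST_STORE` stands in for the list of CA public keys your browser ships with. The sketch checks three things: the certificate names the site you asked for, its issuer is in the trust store, and the issuer’s signature verifies.

```python
import hashlib
import json

# Hypothetical CA key pair (toy RSA, no security). Only the CA knows CA_D.
CA_P, CA_Q = 2**61 - 1, 2**89 - 1
CA_N = CA_P * CA_Q
CA_E = 65537
CA_D = pow(CA_E, -1, (CA_P - 1) * (CA_Q - 1))

def digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def encode(cert: dict) -> bytes:
    # Canonical encoding so the signer and the verifier hash identical bytes.
    return json.dumps(cert, sort_keys=True).encode()

def ca_sign(cert: dict) -> int:
    """Done once by the CA when it issues the certificate."""
    return pow(digest(encode(cert), CA_N), CA_D, CA_N)

# What the browser ships with: trusted CA names mapped to their public keys.
TRUST_STORE = {"Example CA": (CA_N, CA_E)}

def browser_accepts(cert: dict, signature: int, requested_host: str) -> bool:
    if cert["subject"] != requested_host:
        return False                    # certificate is for some other site
    ca_key = TRUST_STORE.get(cert["issuer"])
    if ca_key is None:
        return False                    # issuer is not a CA the browser trusts
    n, e = ca_key
    return pow(signature, e, n) == digest(encode(cert), n)

cert = {"subject": "google.com", "public_key": "1234", "issuer": "Example CA"}
sig = ca_sign(cert)
print(browser_accepts(cert, sig, "google.com"))    # True

forged = dict(cert, public_key="5678")             # attacker swaps in their own key
print(browser_accepts(forged, sig, "google.com"))  # False
```

Note that the browser never contacts the CA here: trust flows entirely from the public key already sitting in its trust store.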

This is how your browser checks that you are communicating with the website you intended and not a bad actor trying to intercept or interfere with your online activity. Certificates are related to the lock icon that appears next to URLs while you browse the web. In Chrome, you can view a site’s certificate (if it provides one) by clicking the icon to the left of the URL and opening the certificate details; the exact menu labels vary between browser versions. The lock’s presence means that the server’s response included a valid certificate for the website you requested.

We should go over the whole thing again, because it is complicated. A certificate states that some public key is associated with some listed subject like google.com. A certificate is sent over the Internet, and we do not really trust the Internet, so we need some way to verify that a certificate is legitimate. A certificate is digitally signed by a CA with its super secret private key, which means that if you have the CA’s public key you can verify the certificate’s legitimacy. Your browser, ultimately your intermediary and gateway to the Internet, trusts some predefined set of CAs and comes installed with their public keys. If the CA that signed a given certificate is on your browser’s list, your browser can verify the certificate and display the mighty lock icon, making you pretty confident that you are talking to Google. The entire point of a certificate is to solve the problem of getting a website’s public key. It provides evidence of an association between a public key and the website in the form of a CA stating that they believe in the association.

If you have been following along well enough, you might be surprised at this solution. Potential issues and vulnerabilities abound:

- How do you know that a certificate signed by one of your browser’s trusted CAs is legitimate? You do not! You just have to trust the CA. That’s it. The math of signature validation may check out, but does the certificate reflect reality? Is the public key-subject association true?

- Certificates for a website are granted to a person that demonstrates control over the website, not necessarily the legitimate owner. What if the website was hacked, and then the hackers asked for a certificate using their own public key rather than the website owner’s public key? Websites get hacked all the time!

- How do certificate authorities get included in my browser’s default list? What if the company that builds my browser was compromised and a malicious fake CA was snuck in? You just have to trust your browser.

- What if a CA’s private key is leaked? How will they even know? How many people at the CA know the key?

- What if someone threatens, bribes, or tricks a CA employee to learn the CA’s private key or be granted a signed but fraudulent certificate?

All of the above concerns are valid. Certificates are not perfect. The risk that you are not talking to Google cannot be eradicated, but security measures reduce it. Certificates are about balancing websites’ need to serve content without friction against users’ need to access content securely. Internet security is about making it harder to do bad things online. Knowing that the security of communications is a continuum allows choices depending on the needs of the task at hand. If communication can be more or less secure, you can require that your bank transactions be more secure than your lunch order. The Internet is an inherently insecure communication channel, and while the current regime is imperfect, it is much more secure than sending everything out on the wire in plaintext and hoping nobody cares enough to impersonate Google when you reach out to them.

While understanding certificates does give you well-informed confidence that you are talking to Google or another website, it also demonstrates that they cannot be relied upon for certainty. Certificates are not proof that you are speaking to the right entity, they are merely evidence. The lock icon’s presence does not mean you are talking to Google, it means you are talking to a server with a CA-signed certificate whose subject is google.com. Certificates have been improperly granted before and they surely will be again, because certificate authorities are human organizations and even well-meaning, competent people make mistakes.

The public key certificate regime is a web of hardware, assumptions, and connections that, at its best, increases your confidence that you are talking to the right web server. Complexity breeds opportunity for bad actors. For any given website, this scheme requires setting up complex security software implementing the ideas discussed in this post. Configuring some piece in a certain way or neglecting to update a software package can leave a website and its visitors vulnerable. The system can even cause issues while operating perfectly. Attackers can buy and be granted certificates for domains that are similar to others like gooogle.com or bankofamrrica.com; if a user does not notice the incorrect URL, the lock icon can lull them into false feelings of security.

I used “prove” and “proof” a few times, like when I said that digital signatures prove authorship and integrity of messages. But basically nothing in the public key certificate regime is actually provable. Everything about it is based on decreasing the probability that you are being tricked, by throwing more and more obstacles in front of potential attackers. It is not impossible to break into an encrypted message by luckily guessing the decryption key, but it is really hard and thus unlikely. You cannot prove that a certificate is true and authentic, but being granted a fraudulent certificate is difficult, so most of the time the certificate is fine and you can go about your life. So how do you know you are talking to Google? If you get a valid certificate, then you do not really know, but you can be pretty sure.